Multi-Agent LLM Embedded with Ethical AI

Implementation approach using Google Cloud Run

Multi-agent LLM systems represent a significant leap forward in AI architecture, leveraging the collective capabilities of specialized LLMs to tackle complex challenges beyond single-model solutions. This approach strategically assigns roles to different LLM agents, facilitates inter-agent communication, and implements collaborative problem-solving algorithms.

Key drivers behind the adoption of multi-agent LLM systems:

Enhanced Problem-Solving Capabilities: Integration of diverse, specialized agents expands our AI solution space, addressing multifaceted problems requiring varied domain expertise.

Improved Reasoning and Accuracy: Cross-validation and iterative refinement between agents reduce false positives and enhance overall reliability of AI-generated results.

Flexibility and Scalability: Modular architecture allows dynamic reconfiguration and seamless integration of new agents, adapting to evolving business needs and technological advancements.

Emulation of Human Collaborative Dynamics: Replication of human expert team synergies achieves more nuanced, context-aware, and innovative problem-solving approaches.

Mitigation of Single LLM Limitations: Distributed task allocation across multiple, focused models addresses constraints like context window limitations and specialized knowledge requirements.

Importantly, the integration of constitutional AI principles, introduced by Anthropic and available in LangChain as the Constitutional Chain, offers a promising avenue for improving ethics and reducing bias in these systems:

Ethical Framework Integration: Constitutional AI allows us to embed ethical guidelines and principles directly into the decision-making processes of our multi-agent systems. This ensures that AI actions align with predefined ethical standards, reducing the risk of unintended consequences.

Bias Mitigation: By implementing constitutional AI, we can establish checks and balances within the multi-agent system. This helps identify and mitigate biases that might be present in individual LLMs, leading to more fair and equitable AI outputs.

Transparent Decision-Making: Constitutional AI principles promote explainability in AI systems. In a multi-agent context, this translates to clearer insights into how different agents contribute to decisions, enhancing accountability and trust.

Adaptive Ethical Behavior: As the multi-agent system encounters new scenarios, constitutional AI frameworks can help it adapt its ethical considerations appropriately, ensuring consistent adherence to core principles across diverse applications.

From a technical standpoint, implementing multi-agent LLM systems with constitutional AI presents opportunities for optimizing resource allocation, enhancing model interpretability, and developing more robust, ethically-aligned AI governance frameworks. As CIOs and technology leaders, we should evaluate how this paradigm shift aligns with our long-term AI strategy, focusing on use cases that could benefit from this advanced, ethically-grounded collaborative AI approach. This integration not only pushes the boundaries of AI capabilities but also ensures that our AI systems remain accountable, fair, and aligned with human values as they grow in complexity and autonomy.

The target architecture below combines a multi-agent LLM system with a constitutional chain.

The multi-agent LLM architecture shown above is an approach to AI-driven question answering. It combines distributed processing, ethical AI principles, and multiple language models to deliver accurate, unbiased, and contextually relevant responses at scale.

Key Components:

Distributed Query Processing:

User Interface: Handles concurrent user inputs.

Decomposer: Breaks complex queries into manageable sub-questions.

Multi-Agent System: Parallel processing of sub-questions with built-in ethical checks.

Aggregator: Consolidates agent responses into coherent outputs.
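The decompose, parallel-agents, aggregate flow described above can be sketched as follows. This is a minimal illustration, not the deployed implementation: decompose, agent_answer, aggregate, and answer_query are hypothetical names, the decomposer is a naive string split, and the agent is a stub standing in for a real LLM call.

```python
import asyncio


def decompose(query: str) -> list[str]:
    """Hypothetical decomposer: split a compound query on ' and '.

    A real system would likely ask an LLM to produce the sub-questions.
    """
    parts = [p.strip(" ?") for p in query.split(" and ")]
    return [p + "?" for p in parts if p]


async def agent_answer(sub_question: str) -> str:
    """Stand-in for one agent calling an LLM (assumed, not a real API)."""
    await asyncio.sleep(0)  # yield control, as a network call would
    return f"[answer to: {sub_question}]"


def aggregate(answers: list[str]) -> str:
    """Consolidate per-agent answers into one response."""
    return " ".join(answers)


async def answer_query(query: str) -> str:
    sub_questions = decompose(query)
    # Run one agent per sub-question concurrently; gather preserves input order.
    answers = await asyncio.gather(*(agent_answer(q) for q in sub_questions))
    return aggregate(list(answers))
```

Calling asyncio.run(answer_query("...")) runs the whole pipeline. Because asyncio.gather returns results in the order of its inputs, the aggregator sees answers in the same order as the sub-questions even though the agents run concurrently.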

LLM as a Service (LLMaaS):

Primary Model: Google Gemini (SLM) for core query processing.

Secondary Model: Llama 2 (70B) served through Groq, for validation and augmentation.

Fine-tuning Capability: Allows model customization for specific use cases.

Continuous Learning: Integrates new training data for ongoing improvement.

Constitutional AI Implementation:

Ethical Validator: Checks responses against ethical guidelines.

Fact Checker: Verifies factual claims in responses.

Safety Filter: Ensures responses don't contain harmful or inappropriate content.

Bias Detector: Identifies and mitigates potential biases in responses.
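One way to picture the validator, fact checker, safety filter, and bias detector is as an ordered list of principles, each able to critique a response and revise it. The sketch below is plain Python and deliberately does not use LangChain's API. Principle, apply_constitution, and the toy safety_filter rule are assumptions for illustration, and a real check would call an LLM rather than match a keyword.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Principle:
    name: str
    # Returns a critique string when the response violates the principle, else None.
    critique: Callable[[str], Optional[str]]
    # Produces a revised response given the original.
    revise: Callable[[str], str]


def apply_constitution(response: str, principles: list[Principle]) -> tuple[str, list[str]]:
    """Run each principle in order; revise the response whenever one is violated."""
    violations = []
    for principle in principles:
        problem = principle.critique(response)
        if problem is not None:
            violations.append(f"{principle.name}: {problem}")
            response = principle.revise(response)
    return response, violations


# Toy safety rule (assumption): block a hard-coded term.
BLOCKED_TERMS = {"password"}

safety_filter = Principle(
    name="safety",
    critique=lambda text: "contains a blocked term"
    if any(term in text.lower() for term in BLOCKED_TERMS)
    else None,
    revise=lambda text: "I can't share that information.",
)
```

Returning the list of violations alongside the revised text keeps a record of which principle changed the answer, which supports the transparency goal described earlier.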

Session and History Management:

Interaction History: Maintains context for improved response relevance.

Integrated Session Management: Orchestrates system components and user interactions.

Key Architectural Features

Scalability: The multi-agent approach allows for horizontal scaling to handle increased load.

Flexibility: Modular design enables easy integration of new models or replacement of existing components.

Ethical AI: Constitutional chains ensure responses adhere to predefined ethical guidelines, factual accuracy, and safety constraints.

Continuous Improvement: Feedback loops and fine-tuning capabilities allow for ongoing system enhancement.

High Availability: Distributed architecture mitigates single points of failure.

Performance Optimization: Parallel processing of sub-questions improves response times for complex queries.

Technical Considerations:

API Management: Robust API gateway needed to manage LLMaaS interactions and ensure consistent performance.

Data Privacy: Implement strong encryption and access controls for user data and interaction history.

Load Balancing: Implement intelligent load balancing for optimal distribution of queries across agents.

Monitoring and Logging: Comprehensive monitoring of system performance, model accuracy, and ethical compliance.

Versioning: Implement versioning for models, constitutional rules, and agent logic to manage updates and rollbacks.

Caching Strategy: Implement intelligent caching to improve response times for common queries.

Failover Mechanisms: Design redundancy and failover processes to ensure system reliability.

Computational Resources: Plan for significant GPU/TPU resources to support multiple LLMs and parallel processing.

Demo of Build and Deployment in Google Cloud Run

A simple app demonstrates the enhanced version, which uses the multi-chain LLMs.

Improving Stability and Resiliency of the application

While Google Cloud Run offers built-in container stability and reliability, request timeouts become a bigger concern when deploying at production scale.

The following aspects should be considered when building a more resilient and stable application.

I implemented retry and circuit breaker logic for an LLM API on Google Cloud Run, focusing on the interaction between the front-end UI and the serverless-hosted backend LLM, and on keeping the app active for at least 5 minutes:

Front-end UI:

Implement a loading indicator to show when a request is in progress

Use exponential backoff for retries

Set a maximum number of retry attempts (e.g., 3)

Display appropriate messages based on circuit breaker state

Backend (Cloud Run):

Implement request queuing to manage concurrent requests

Use a circuit breaker pattern to prevent overwhelming the LLM service

Implement timeouts for LLM API calls
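Request queuing and timeouts on the backend can be combined in a few lines with asyncio. The sketch below is one possible shape under stated assumptions: call_with_limits is a hypothetical helper, and the concurrency limit and timeout values are placeholders to tune against the actual LLM service.

```python
import asyncio

MAX_CONCURRENT_CALLS = 4      # assumed limit; tune to the LLM service quota
LLM_TIMEOUT_SECONDS = 30.0    # assumed per-call timeout


async def call_with_limits(llm_call, semaphore: asyncio.Semaphore,
                           timeout: float = LLM_TIMEOUT_SECONDS):
    """Wait for a free slot, then run the LLM call with a timeout.

    llm_call is any zero-argument coroutine function (a stand-in for the
    real client call). Requests beyond the semaphore limit queue here.
    """
    async with semaphore:
        # Raises asyncio.TimeoutError if the call exceeds the timeout.
        return await asyncio.wait_for(llm_call(), timeout=timeout)
```

Create the semaphore once inside the running event loop, for example asyncio.Semaphore(MAX_CONCURRENT_CALLS) at application startup, and share it across request handlers. The timeout only starts once a slot is acquired, so time spent waiting in the queue is not counted against the LLM call.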

Circuit Breaker Pattern:

Implement three states: Closed, Open, and Half-Open

Closed: Normal operation, requests pass through

Open: Requests are immediately rejected without calling the LLM API

Half-Open: Allow a limited number of test requests to pass through

Define thresholds for opening the circuit (e.g., error rate, response time)

Use a sliding window to track recent requests and errors

Implement automatic transition from Open to Half-Open after a cooldown period
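The three states and the sliding window can be captured in a small class. This is a simplified sketch, not a production library: CircuitBreaker and CircuitOpenError are hypothetical names, the defaults (50% failure rate, window of 10, 20-second cooldown) mirror the example values used later in this article, and the Half-Open state here allows a single trial call.

```python
import time
from collections import deque


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_rate=0.5, window_size=10, cooldown=20.0,
                 clock=time.monotonic):
        self.failure_rate = failure_rate
        self.cooldown = cooldown
        self.clock = clock
        self.results = deque(maxlen=window_size)  # sliding window: True = failure
        self.state = "closed"
        self.opened_at = 0.0

    def call(self, fn):
        if self.state == "open":
            if self.clock() - self.opened_at < self.cooldown:
                raise CircuitOpenError("circuit is open")
            self.state = "half_open"  # cooldown elapsed: allow a trial call
        try:
            result = fn()
        except Exception:
            self._record(failed=True)
            raise
        self._record(failed=False)
        return result

    def _record(self, failed):
        if self.state == "half_open":
            # One trial decides: success closes the circuit, failure reopens it.
            if failed:
                self._open()
            else:
                self.state = "closed"
                self.results.clear()
            return
        self.results.append(failed)
        window_full = len(self.results) == self.results.maxlen
        if window_full and sum(self.results) / len(self.results) >= self.failure_rate:
            self._open()

    def _open(self):
        self.state = "open"
        self.opened_at = self.clock()
        self.results.clear()
```

Wrapping the LLM call as breaker.call(lambda: client_call(prompt)) is enough to use it, where client_call stands for whatever function reaches the LLM API. The injectable clock exists only to make the cooldown testable without sleeping.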

LLM API Interaction:

Use async/await for non-blocking API calls

Implement error handling for various failure scenarios

Log errors and retry attempts for monitoring

Update circuit breaker state based on API call results

Keeping the App Active:

Implement a heartbeat mechanism to ping the service every 4 minutes

Use Cloud Scheduler to trigger the heartbeat

Implement a simple health check endpoint
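A health check endpoint for the heartbeat can be very small. The sketch below uses only the Python standard library. The /health path, HealthHandler, health_payload, make_server, and main are assumptions for illustration, and a real service would more likely add the route to its existing web framework. I avoid /healthz here because, as far as I know, Cloud Run treats some paths ending in z as reserved.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

HEALTH_PATH = "/health"  # hypothetical path for the scheduler to ping


def health_payload() -> bytes:
    """Body returned to the heartbeat; extend with real checks as needed."""
    return json.dumps({"status": "ok"}).encode("utf-8")


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == HEALTH_PATH:
            body = health_payload()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep heartbeat pings out of the request log


def make_server(port=None):
    # Cloud Run passes the listening port in the PORT environment variable.
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    return ThreadingHTTPServer(("0.0.0.0", port), HealthHandler)


def main():
    make_server().serve_forever()
```

A Cloud Scheduler HTTP job pointed at the service URL plus /health, on a 4-minute schedule, would then act as the heartbeat described above.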

Error Handling:

Categorize errors (e.g., network issues, LLM service errors)

Implement appropriate retry strategies for each error type

Provide user-friendly error messages in the UI

Update circuit breaker state based on error types and frequency

Monitoring and Logging:

Use Cloud Monitoring to track API calls, errors, and latency

Set up alerts for high error rates, extended downtime, or circuit breaker state changes

Implement detailed logging for troubleshooting

Monitor circuit breaker state transitions and failure rates

Circuit Breaker Configuration:

Error Threshold: Set a percentage of failures (e.g., 50%) that triggers the Open state

Timeout Duration: Define how long the circuit stays Open before transitioning to Half-Open

Reset Timeout: Set a duration for successful operations in Half-Open state before fully closing the circuit

Failure Count: Define the number of consecutive failures that trigger the Open state

To manage retries effectively, balance retry limits, timing, and error identification, and pair them with a circuit breaker. Here is how to optimize a retry strategy for better resilience:

Set a Reasonable Retry Limit: Determine a sensible number of retries to handle temporary issues without causing excessive load or delays. Too few retries might not resolve transient problems, while too many can overwhelm the system and delay recognition of persistent issues. A limit of 3 is typical.

Implement Exponential Backoff with Jitter: Instead of retrying immediately after a failure, use an exponential backoff strategy combined with jitter. Exponential backoff gradually increases the wait time between retries, preventing a surge of retry attempts that could overwhelm the system. Even with exponential backoff, there is still a risk of the "thundering herd" effect, where multiple clients retry at similar intervals and flood the system. To mitigate this, introduce jitter, that is, randomness in the backoff intervals. Jitter spreads retries out over time and prevents synchronized retry attempts across distributed clients, which helps keep the system stable under load.
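As a rough illustration of backoff with jitter, the sketch below uses the "full jitter" variant: each delay is drawn uniformly between zero and an exponentially growing ceiling. The names backoff_delay and call_with_retries, the base of 0.5 seconds, and the 30-second cap are assumptions, and max_attempts=3 counts total attempts.

```python
import random
import time


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0, rng=random) -> float:
    """Full-jitter delay in seconds for a zero-based retry attempt."""
    ceiling = min(cap, base * (2 ** attempt))  # exponential growth, capped
    return rng.uniform(0.0, ceiling)           # jitter: random point below the ceiling


def call_with_retries(fn, max_attempts: int = 3, sleep=time.sleep):
    """Call fn, retrying on any exception with jittered exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(backoff_delay(attempt))
```

For simplicity this retries on every exception. In practice the except clause should be narrowed to errors that are worth retrying.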

Identify Retriable Errors: Not all errors should trigger a retry. Focus on retrying only transient errors that are likely to resolve over time, such as:

408 (Request Timeout)

5XX errors (indicating server-side issues)

Avoid retrying non-recoverable errors, such as:

400 (Bad Request)

403 (Forbidden)

Retrying these non-recoverable errors is futile as they represent permanent issues that need a different resolution approach.
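The classification above reduces to a small predicate on the HTTP status code. In this sketch is_retriable is a hypothetical helper that encodes only the rules listed here. A real client might also treat connection errors or other status codes as transient.

```python
def is_retriable(status_code: int) -> bool:
    """Return True for 408 and any 5XX status, False otherwise."""
    return status_code == 408 or 500 <= status_code <= 599
```

Checking this predicate before scheduling a retry keeps permanent failures such as 400 and 403 from consuming retry attempts.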

Combine with a Circuit Breaker: Pairing the Retry Pattern with a circuit breaker mechanism enhances system resilience. A circuit breaker monitors the health of a service and temporarily halts requests if failures exceed a specific threshold. This pause prevents further strain on a failing service, allowing time for recovery before retrying. When a service recovers, the circuit breaker closes, and normal operations resume.

Ensure Idempotence: When implementing retries, idempotence is crucial. Idempotence means that performing the same operation multiple times has the same effect as doing it once. Ensuring that your system operations are idempotent prevents unintended side effects from retries, such as duplicate transactions or data corruption. This is especially important in distributed systems where network issues or other errors can cause multiple retries.
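A common way to make a retried operation idempotent is to attach an idempotency key to the request and store the result under that key. The sketch below shows the idea with an in-memory dictionary. IdempotentStore and new_idempotency_key are hypothetical names, and a real deployment would need a shared store (a database or cache) because Cloud Run instances do not share memory.

```python
import uuid


class IdempotentStore:
    """In-memory stand-in for a shared result store (assumption)."""

    def __init__(self):
        self._results = {}

    def run_once(self, key: str, operation):
        # A retried request with the same key returns the stored result
        # instead of running the operation again.
        if key not in self._results:
            self._results[key] = operation()
        return self._results[key]


def new_idempotency_key() -> str:
    """Generate a key once per logical request and reuse it on every retry."""
    return str(uuid.uuid4())
```

The client generates the key before the first attempt and sends the same key on each retry, so a request that succeeded on the server but timed out on the way back is not executed twice.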

By carefully managing retry limits, timing, error identification, using jitter, employing circuit breakers, and ensuring idempotence, you can minimize disruptions and maintain optimal system performance.

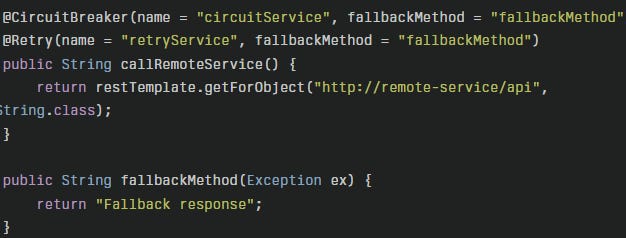

Implementation Specifications

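Retry and circuit breaker logic can be implemented by adding the Resilience4j dependency. Resilience4j is a Java library, so the annotations described here apply to a Java service (for example, a Spring Boot layer that calls the backend LLM API) rather than to browser-side JavaScript.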

With this setup:

The retry will allow the callRemoteService method to be retried up to 3 times if it throws HttpServerErrorException

The circuit breaker will monitor if the failure rate exceeds 50% over a window of 10 requests

If the failure threshold is exceeded, the circuit will open for 20 seconds, during which it will immediately fail without attempting the call and invoke the fallbackMethod

After 20 seconds, it will allow a few requests through to test if the service has recovered

If the circuit is closed but all retry attempts fail, the fallbackMethod will also be invoked

Some additional considerations:

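Order the annotations with @CircuitBreaker first and @Retry second, so the retry happens within the circuit breaker (in Resilience4j's Spring integration the effective nesting follows the configured aspect order, so verify it for your version)

Ensure the circuit breaker and retry are configured with appropriate values based on the characteristics of the remote service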

Monitor the circuit breaker and retry metrics to tune the configurations

Consider adding a bulkhead to limit the number of concurrent calls to the remote service

In summary, by adding the @CircuitBreaker annotation along with the @Retry annotation and providing a shared fallbackMethod, you can implement a resilient call to the backend LLM API that will retry on failures, trip the circuit on too many failures, and provide a fallback response.

Why LangChain Constitutional Chain?

The LangChain Constitutional Chain serves as a powerful mechanism for enhancing the ethical and operational standards of AI systems. By enforcing a constitution of ethical guidelines, reducing biases, and minimizing hallucinations, it ensures that the AI outputs are not only aligned with societal values but also reliable and trustworthy. This makes the LangChain Constitutional Chain an essential component for deploying responsible AI systems that can be trusted by users across various domains.

Here are some sample GitHub repos and solutions that can help accelerate your journey to multi-agent LLM systems:

Langroid (github.com)

Langroid is an open-source project available on GitHub that focuses on creating frameworks and tools to manage interactions between multiple language models (LLMs) and agents in a systematic and scalable way. It provides a structured approach to deploying and orchestrating LLMs for complex tasks that require multiple steps or involve different components working together.

GoogleCloudPlatform/llm-pipeline-examples (github.com)

This repo contains examples of an SSH server that lets the DeepSpeed launcher start LLM training inside containers on Google Cloud, with logging to collect logs from the containers.

rnadigital/agentcloud: Agent Cloud is like having your own GPT builder with a bunch of extra goodies. The GUI features 1) a RAG pipeline that can natively embed 260+ data sources, 2) conversational apps (like GPTs), 3) multi-agent process automation apps (crewai), 4) tools, and 5) teams and user permissions.

I hope the above is helpful in your journey to multi-agent LLM systems.

Reach out to me if you have any questions!